How do temperature and environment affect testing results?

Introduction

Testing isn’t just about the code or the models; it’s about the whole ecosystem that surrounds your experiments. In the fast-moving web3 finance world, where multi-asset trading spans forex, stocks, crypto, indices, options, and commodities, the ambient temperature and the surrounding environment can tilt the numbers you rely on. I’ve spent years testing trading bots, data feeds, and DeFi protocols, and I’ve learned that even small shifts in temperature, humidity, airflow, or power stability can ripple through latency, data integrity, and decision timing. If you want reliable insights, you have to treat the test lab as part of the system you’re evaluating—not just a backdrop.

Body

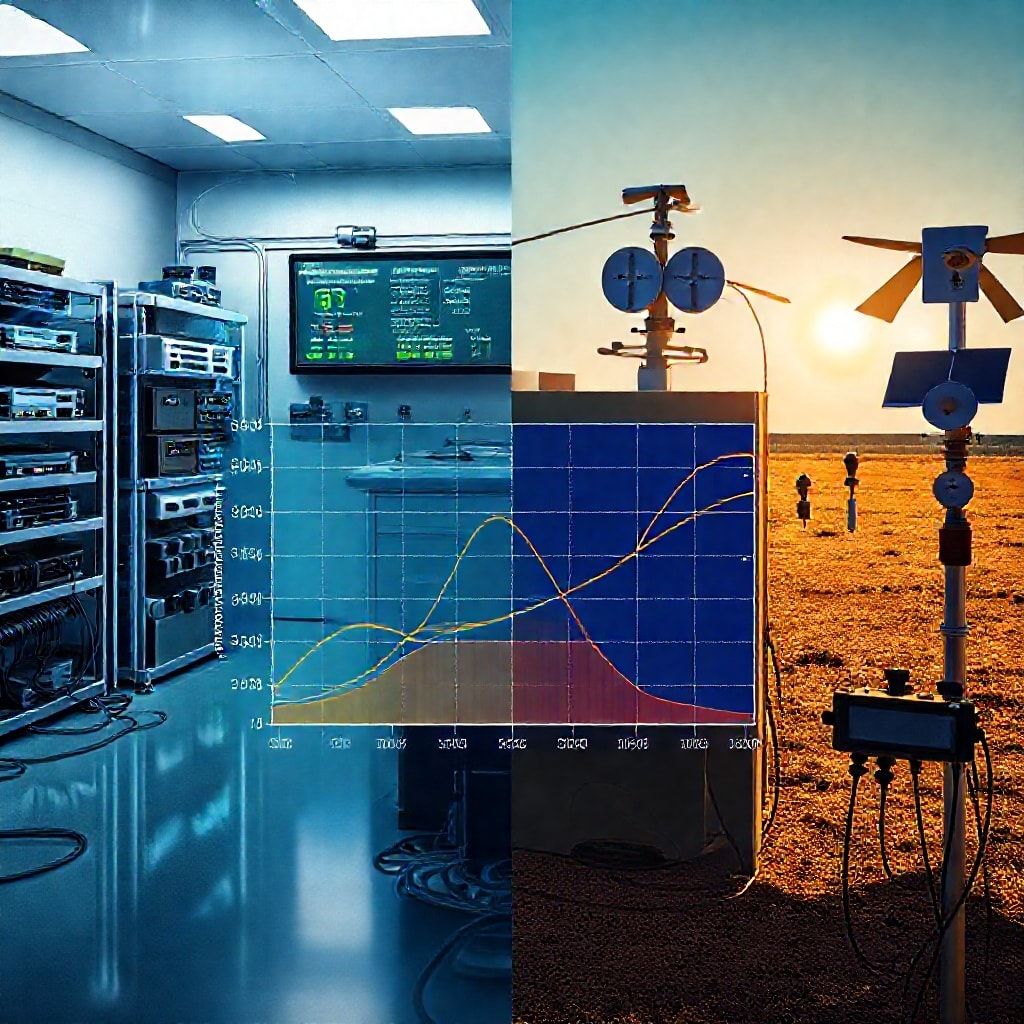

Temperature and hardware performance: how heat changes speed and accuracy

- Thermal throttling changes everything. When CPUs, GPUs, and accelerators overheat, they reduce clock speeds, which slows down backtests, ML inference, and real-time decisioning. A few degrees of ambient variance can be enough to push a heavily loaded machine into throttling, which shifts execution times and distorts performance metrics, especially in latency-sensitive setups where milliseconds matter.

- Battery and edge devices aren’t immune. Mobile wallets, IoT nodes, or edge collectors used for on-chain data gathering lose capacity and stability as temps rise. That can show up as dropped samples, intermittent connectivity, or skewed latency measurements—all of which can mislead when you’re evaluating reliability or risk.

- Data centers aren’t perfect islands. Even well-ventilated rooms have hotspots and cooling cycles that change power draw and push some machines into thermal throttling while others run normally. If you run a suite that simulates market stress or latency under different loads, keeping a stable ambient temperature helps you separate software issues from hardware effects.

- Calibration matters. Measurement tools—oscilloscopes, network analyzers, time-sync devices—can drift with temperature. If your test harness is temperature-sensitive, you’ll see artificial variance in latency, jitter, packet loss, or throughput.
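
One rough way to spot throttling or other hardware-side drift is to time the same fixed workload many times and flag runs that are much slower than the rest. The sketch below assumes a simple CPU-bound loop as the workload and a 25% tolerance over the median; both are illustrative choices, not recommended values, and the function names are hypothetical.

```python
import statistics
import time


def fixed_workload(n: int = 200_000) -> int:
    """A deterministic CPU-bound loop; the work itself is arbitrary."""
    total = 0
    for i in range(n):
        total += i * i
    return total


def time_runs(workload, repeats: int = 20) -> list[float]:
    """Time the same workload repeatedly and return durations in seconds."""
    durations = []
    for _ in range(repeats):
        start = time.perf_counter()
        workload()
        durations.append(time.perf_counter() - start)
    return durations


def flag_slow_runs(durations: list[float], tolerance: float = 0.25) -> list[int]:
    """Return indices of runs slower than the median by more than `tolerance`."""
    baseline = statistics.median(durations)
    return [i for i, d in enumerate(durations) if d > baseline * (1 + tolerance)]


if __name__ == "__main__":
    runs = time_runs(fixed_workload)
    print("median seconds:", statistics.median(runs))
    print("suspect run indices:", flag_slow_runs(runs))
```

A flagged run is only a hint. Slow runs can also come from background processes or scheduler noise, so it helps to check them against temperature readings from the same time window before blaming heat.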

Environment factors beyond temperature: humidity, EMI, and physical setup

- Humidity and corrosion risk. In some test labs or data rooms, high humidity accelerates corrosion on connectors or boards, subtly affecting signal integrity over time. It’s not about one test; it’s about the cumulative effect on a test bench you rely on for long-running validation.

- EMI and RF interference. Wireless nodes, co-located servers, or shared spaces can pick up electromagnetic noise that distorts timing signals or data integrity checks. For trading systems that depend on precise timestamping and synchronized feeds, this noise can masquerade as a software bug, or hide a real one.

- Vibration and rack layout. Dense racks and heavy hardware can vibrate during cooling cycles or maintenance, nudging cables and connectors enough to add latency or cause occasional sample drops. Proper cable management and vibration isolation aren’t flashy, but they matter when you’re benchmarking microsecond-level performance.

- Airflow patterns and hot spots. Poor airflow can create uneven cooling, pushing some components into throttling while others stay calm. In experiments that compare multiple configurations, identical airflow and intake conditions help you compare apples to apples.

Why this matters for web3 finance testing: multi-asset reality checks

- Across asset classes, data feed latency, order routing, and market microstructure shape results. A backtest may look great, but if live data feeds lag under certain temperatures, you’ll see slippage that your test didn’t predict. This is especially true for crypto markets where block times and mempool behavior interact with network conditions.

- Parity between test and production environments is the border between insight and illusion. Use the same OS images, libraries, Python/Node versions, seeds, and data sampling rates in tests as you do in production. Time synchronization (NTP/PTP) should be rock solid so you’re not chasing phantom delays caused by clock drift.

- A practical lab recipe: simulate a range of environmental conditions (temperature, humidity, network load) and capture how performance metrics shift. Then run parallel live-fire tests on a small scale to verify that your controlled testing aligns with real-world behavior.
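
The lab recipe above can start as a plain test matrix: every combination of the environmental conditions you want to cover. Here is a minimal sketch, assuming three hypothetical factors with made-up levels; swap in whatever your lab can actually control.

```python
from itertools import product

# Hypothetical condition levels; pick values that match your own lab.
AMBIENT_TEMPS_C = [18, 25, 32]
HUMIDITY_PCT = [30, 60]
NETWORK_LOAD = ["idle", "moderate", "saturated"]


def build_test_matrix(temps, humidities, loads):
    """Return one dict per combination of environmental conditions."""
    return [
        {"ambient_temp_c": t, "humidity_pct": h, "network_load": n}
        for t, h, n in product(temps, humidities, loads)
    ]


if __name__ == "__main__":
    matrix = build_test_matrix(AMBIENT_TEMPS_C, HUMIDITY_PCT, NETWORK_LOAD)
    print(len(matrix), "scenarios")
    for scenario in matrix[:3]:
        print(scenario)
```

A full cross product grows quickly as you add factors, so in practice you may want to sample from it or prioritize the combinations closest to your production conditions.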

Reliability, risk, and a few practical guardrails

- Embrace test environment parity. Your “production-like” environment should mirror production’s software stack, data fidelity, and latency profiles. If you tweak the environment for speed, document it and quantify how those tweaks might bias results.

- Track environmental metrics alongside test results. Log ambient temp, humidity, airflow rate, power supply voltage, and clock drift during every run. The correlation data itself becomes a part of the reliability story.

- Use resilience tests. Include tests that deliberately push temperature ranges, data quality disruptions, and network variability. These tests help reveal failure modes that only appear under stress, which is exactly what you want to preempt before real capital is on the line.

- Data quality over pretend precision. In finance, the value of a test is not just the number you get, but how closely it matches the live system under comparable conditions. When your test data or feed is inconsistent due to environmental quirks, the takeaway should be “fix the data path” rather than “adjust the model.”

Reliability-enhanced leverage and risk-aware strategies

- Leverage considerations under environmental variance. If you’re using leverage in a testing context (backtests, paper trading with margin-like exposure, or simulated DeFi loan/risk scenarios), include environmental stress steps. For example, stress test with latency spikes, occasional data gaps, and modest temperature-induced performance dips to see if your risk controls still hold.

- Build conservative risk budgets for live deployment. Calibrate a margin or liquidity cushion that accounts for potential performance degradation during suboptimal environmental conditions. A buffer reduces the chance of cascade failures in execution or liquidity crunches during volatile periods.

- Real-time monitoring as a moat. Have dashboards that show environmental sensors alongside trading metrics (latency, fill rate, slippage, error rate). If the environment drifts, auto-notify and, if appropriate, throttle order flow or switch to a safer trading profile.

- Redundancy and edge computing. For latency-sensitive strategies, consider co-located or geographically diverse data paths and edge nodes that reduce exposure to a single environmental point of failure. This isn’t glamorous, but it pays off when temps spike or a single rack hiccups.
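
The monitoring idea above (notify, then throttle or switch to a safer profile when the environment drifts) can be reduced to a small decision function. This is a sketch under stated assumptions: the thresholds, the three profile names, and the rule "one breach throttles, two or more go safe" are all hypothetical and would need tuning against your own risk limits.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Limits:
    """Illustrative thresholds only; not recommended values."""
    max_ambient_temp_c: float = 30.0
    max_latency_ms: float = 50.0
    max_error_rate: float = 0.01


def choose_profile(ambient_temp_c, latency_ms, error_rate, limits=Limits()):
    """Return (profile, breaches) for the current readings."""
    breaches = []
    if ambient_temp_c > limits.max_ambient_temp_c:
        breaches.append("ambient_temp_c")
    if latency_ms > limits.max_latency_ms:
        breaches.append("latency_ms")
    if error_rate > limits.max_error_rate:
        breaches.append("error_rate")

    if not breaches:
        profile = "normal"
    elif len(breaches) == 1:
        profile = "throttled"  # reduce order flow, keep trading
    else:
        profile = "safe"       # multiple signals degraded at once
    return profile, breaches


if __name__ == "__main__":
    print(choose_profile(22.0, 10.0, 0.0))
    print(choose_profile(35.0, 10.0, 0.0))
    print(choose_profile(35.0, 80.0, 0.0))
```

Returning the list of breaches alongside the profile makes the alert useful on its own: the notification can say which signal drifted, not just that something did.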

Advanced tech, security, and charting: pairing tests with tools

- Oracles, on-chain data, and test integrity. DeFi testing depends on reliable oracles and data feeds. Temperature and environment can affect data producers and the timing of updates. Validate the end-to-end chain from data generation to on-chain settlement under varied conditions.

- Charting tools and analytics. When you chart performance, plot environmental variables as well. Correlating heat or network load with latency or slippage helps you spot non-obvious culprits rather than chasing random variance.

- Security mindset in test labs. Use hardware security modules, secure enclaves, and tamper-evident logging in test environments to prevent contamination of results. Production-level security should be reflected in your testing regime so you’re not surprised by a security edge case when going live.

- DeFi development and governance tests. Smart contracts, liquidity pools, and cross-chain bridges are sensitive to timing and data reliability. Test across network delays and variable data conditions to gauge how robust the contracts are to real-world noise.
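
For the charting point above, a quick first check before plotting anything is the Pearson correlation between an environmental variable and a performance metric. The sketch below computes it by hand with the standard library; the sample readings are made up purely to show the call.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length, at least 2 points")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)


if __name__ == "__main__":
    # Made-up sample readings, one pair per test run.
    ambient_temp_c = [21.0, 23.5, 26.0, 28.5, 31.0]
    latency_ms = [3.1, 3.0, 3.4, 3.9, 4.6]
    print("temp vs latency r =", round(pearson(ambient_temp_c, latency_ms), 3))
```

A strong coefficient is a prompt to investigate, not proof that heat caused the slowdown. Load often rises with temperature, so it is worth checking other logged variables before drawing conclusions.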

DeFi today: promises, challenges, and a realistic path forward

- Growth with friction. DeFi continues to mature with better tooling, audits, and open protocols. The promise is faster settlement, cheaper liquidity, and programmable capabilities that empower traders to automate complex strategies. The challenges remain protocol risk, oracle reliability, and cross-chain friction, all of which environmental conditions can amplify.

- Transparency as a weapon. The more you reveal about environmental conditions and testing methodology, the more confident users become. Publish test setups, sensor logs, and anonymized dashboards to help users understand why results may vary and how you’re mitigating those variables.

- Smart contract testing, formal verification, and AI-assisted QA. The future points toward stronger formal methods and AI-guided test generation that accounts for environmental drift. Expect more integrated toolchains that simulate hardware throttling, network jitter, and data feed anomalies within smart contract testbeds.

Future trends: smart contracts, AI-driven trading, and what to expect

- AI-driven test optimization. AI can help identify which environmental conditions most affect results and prioritize where to allocate test coverage. Think adaptive test matrices that learn from prior runs and propose the next best stress scenario.

- Smart contracts as first-class testable entities. Beyond unit tests, end-to-end simulation that includes price feeds, oracles, and on-chain liquidity flow will become standard practice. You’ll want testnets that mimic real-world delays and data quality dynamics to validate contract behavior under imperfect data.

- AI-powered risk controls. In live trading, AI models that adjust risk parameters in real time based on environmental signals (temperature, latency, data quality) could help keep drawdowns within bounds without sacrificing opportunity.

- Security-by-design in the era of DeFi 2.0. Expect more emphasis on robust key management, on-chain governance tests, and automated security scans woven into CI/CD for contracts and orchestration layers.

Promotional slogans you can use to resonate with readers

- Cool temps, clear signals.

- Temperature-under-control testing for rock-solid results.

- When the lab stays calm, your trades stay sharp.

- Trust the environment you test in—and the results you publish.

- Build resilience in reality, not just in theory.

Putting it all together: a practical mindset for traders and testers

- Treat the lab as part of the system. Your testing results gain credibility when you show how environmental factors were measured and mitigated.

- Prioritize parity between test and production. Even small gaps in software, data, or hardware configurations become big gaps in the field.

- Balance ambition with realism. Leverage and AI-driven tools are powerful, but they perform best when their limits are understood in the context of temperature, humidity, and network realities.

- Communicate clearly about variability. Don’t sweep the noise under the rug. Document variability sources, the steps you took to reduce them, and what remains outside your control.

A closing thought for traders and builders

If you’re building or testing in web3 finance, remember this: the best insights come from integrating physics with algorithms. Temperature and environment aren’t nuisances to be ignored; they’re data points that tell you how the system behaves under pressure. When you design your tests with that mindset, you don’t just chase better numbers—you gain trustworthy signals you can act on across forex, stocks, crypto, indices, options, and commodities. And that clarity is what turns good testing into reliable trading.

If you’re curious about how your current testing setup stands up to real-world environmental variation, I’m happy to help map out a lightweight environment-monitoring plan and a starter test matrix that covers the assets you care about, the data feeds you rely on, and the hardware you can access. After all, cool heads and precise data can unlock hotter, more confident trading decisions.
